Last remarks

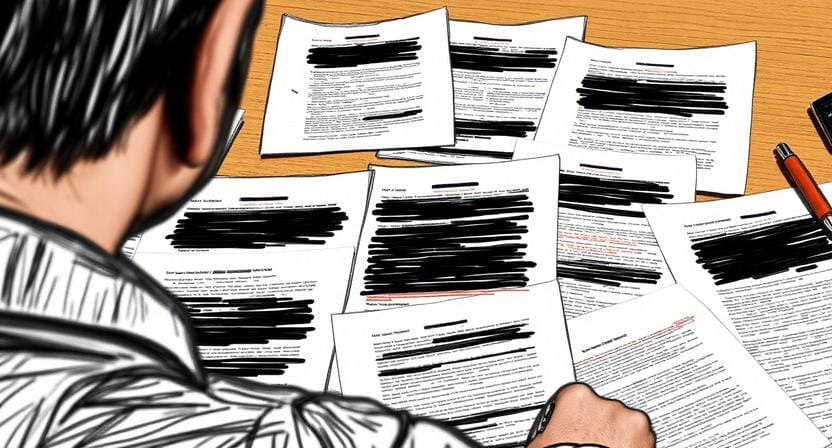

In closing, I’d like to stress that this is not the full picture. There is much more to say, but I cannot publish the relevant evidence without serious risk.

A lot falls under confidentiality, and that puts me in an awkward position. It leaves me only the published data to build a case. That’s not ideal, but I think my argument is rather convincing nonetheless, especially because the published professional judgments of the very people I criticise solidify it (on every point of attack, I might add). If nothing else, this is a clear indication that there is something seriously questionable about the implicated results.

But the situation is not really about data; it’s about the systemic rejection of responsibility, which betrays one of the most important scientific principles. For that, too, there is proof, though I cannot show it. But rest assured, I do have everything necessary to detail the story’s other aspects. I have evidence that shows the issues were known before I ever entered the department. I can prove that key figures attended meetings on these problems and misunderstood basic principles of the experimental approaches employed, and that repeated data requests were unsuccessful. Furthermore, I have proof of confidential information being inappropriately forwarded, and of superiors instructing inappropriate workplace behaviour, which goes directly against good scientific practice. I can show that a large group of scientists knew about the situation, yet did nothing, and that the university’s ombudsman contact information was inaccurate for a prolonged period (I have copies of the unanswered contact requests). None of this was obscure, none of it hidden. There is also further data that is unpublished but relevant, not to mention my own results. These are largely uninteresting (or even non-existent, because I did not save measurements without any signal), but there is one recording that shows something crucial, at least in my opinion.

I also have a backup of all relevant correspondence, including that with the country’s authority on scientific integrity, and all the forwarded information. It documents the narrow limits within which reporting must occur to even be considered in the first place, and how inappropriate these limits are for large, complex situations. It includes my protest against an openly ridiculous approach, and shows that the commission consisted largely of members from widely divergent fields. I’d argue that raises a question about their (non-)expertise, but then again, they did verify my concerns. They just didn’t want to look any further.

It is difficult, then, to understand who is responsible. Obviously, the department itself failed at many discrete functions, from on-boarding (which ensures appropriate expertise), through data scrutiny and retention, to scientific duties in general. It’s clear who’s responsible here, and in my opinion that part weighs the heaviest. But it didn’t stop there, and we must consider where, how, and why the system failed in so many other places, and so consistently. That review will be gruelling, but I honestly believe it is necessary. The situation is too large to be reduced to individual failings. Something must underpin them, something that affects academia as a whole.

Were it up to me, I’d start by looking at changes in the system over the past few decades. I actually did that by briefly analysing the student-to-supervisor ratio of the country’s largest universities (based on the government’s public data). It did reveal a clear trend. Care to guess?

One could also question changes in the academic population’s mental acuity over time, e.g. by analysing mental health issues. That might reveal a significant portion being constantly and excessively stressed, which I’m sure has nothing to do with the grant-based funding that prevents stability and security. Or the constant pressure of publishing ever more, ever faster, with ever-rising impact, so as to keep one’s career above water. (I should note that the findings in question were published before the pandemic. I base my opinion on these matters on information available at the time. Though interest in the mental health of scientists has since increased significantly, I doubt the lived situation has improved much.)

Then there’s the technologically driven acceleration of life in general, and the resulting faster publication of material. Today, it is standard procedure to include results still under peer review, as publications are uploaded to pre-print servers. Not to mention paper mills, or the impact that will follow from the use of AI.

These are just a few considerations. There are certainly more relevant factors, but it seems obvious that all have a crucial commonality: They increase pressure and produce an environment that cannot sustain real research. Science is a slow virtue. It needs careful, deliberate, oftentimes tedious reflection; a deep understanding that can take decades to manifest. Anything that is rushed and unreliable causes far more damage than is visible at first. My story is a good example of just how much effort it takes to identify and understand problematic data. And how impossible it is to correct, even when the problem is obvious.

The scientific process has been honed for centuries, and for good reason. Right now, it is under serious threat. If it is to survive, it must obey its own rules and ruthlessly expel those who ignore them—not those who enforce them.